Kiln v0.13.1 Release: Vertex, Azure, QwQ, Gemma, Hugging Face, Anthropic, LiteLLM, Import CSV, and More!

Lots to check out in our latest update

Hi Kiln Early Adopters 👋,

You are getting this email because you subscribed to updates about the Kiln AI GitHub project.

I didn't expect this release to be so feature-packed when we started, but things kept piling up in a good way. There are lots of goodies here to explore:

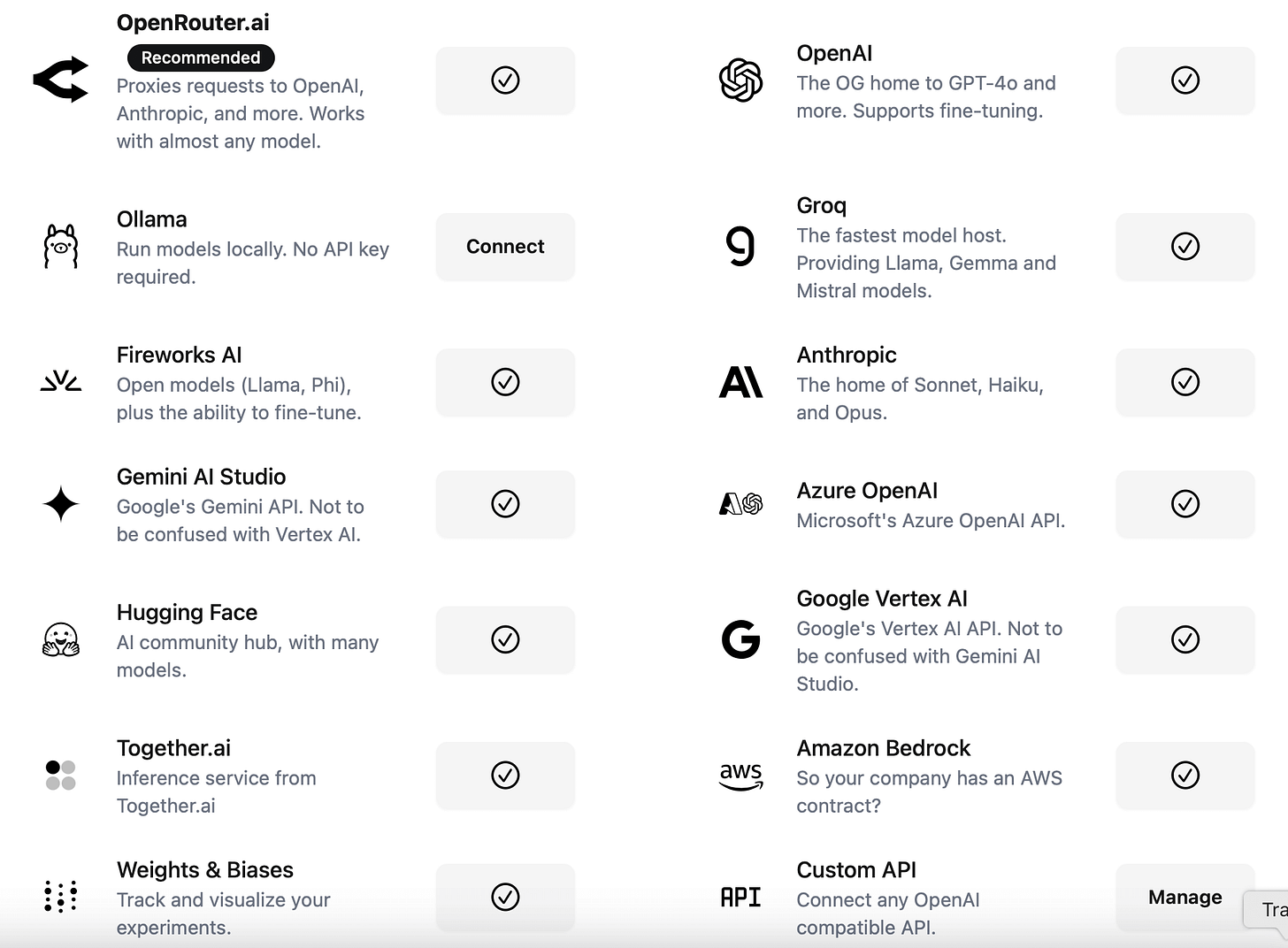

New AI Providers

You can now connect and use the following providers in Kiln:

Gemini API

Vertex AI

Hugging Face

Anthropic

Azure OpenAI

Together.ai

This is on top of our existing providers: Ollama, OpenAI, Groq, Fireworks, OpenRouter, AWS Bedrock, and any OpenAI-compatible endpoint.

New Built-in Models

We have all the hip new models, each one tested to work with Kiln features like structured output and synthetic data generation:

Gemma 3 (27B, 12B, 4B, 1B): Impressive new weight-available models from Google

QwQ 32B: Reasoning you can run locally, from Qwen

o1 & o3-mini: Not new, but now generally available without an invite

Phi 4 (Mini + 5.6B): New Phi models from Microsoft

New Serverless Fine Tuning: Together.ai

We added support for fine-tuning models on Together.ai. Like Fireworks and OpenAI, Together supports "serverless" fine-tuning: there are no tuning GPUs or long-running inference servers to manage. Hit "tune", then pay by token when it's inference time. Everything scales to zero cost when you aren't using it, which makes it great for rapid experimentation.

With Together you can easily fine-tune six models: Llama 3.2 1B/3B, Llama 3.1 8B/70B, and Qwen 2.5 14B/72B.

Import CSV Files into your Kiln Dataset

Already have a dataset? The new CSV import feature makes it easy to load it into Kiln for fine-tuning and evals. Check out the docs.

Thanks to @leonardmq for contributing this!
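If you are assembling the CSV from existing data in code, the sketch below shows one way to write and sanity-check it with Python's standard `csv` module before importing. The column names `input` and `output` are assumptions for illustration, not Kiln's documented schema; check the CSV import docs for the exact columns Kiln expects.

```python
import csv
import io

# Hypothetical column names for illustration; see the Kiln CSV import docs for the real schema.
REQUIRED_COLUMNS = ["input", "output"]


def write_dataset_csv(rows, fh):
    """Write (input, output) pairs to `fh` as a CSV with a header row.

    csv.DictWriter quotes values containing commas, quotes, or newlines.
    """
    writer = csv.DictWriter(fh, fieldnames=REQUIRED_COLUMNS)
    writer.writeheader()
    for input_text, output_text in rows:
        writer.writerow({"input": input_text, "output": output_text})


def validate_dataset_csv(fh):
    """Parse the CSV in `fh` and raise ValueError if a required column is missing."""
    reader = csv.DictReader(fh)
    header = reader.fieldnames or []
    missing = [col for col in REQUIRED_COLUMNS if col not in header]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    return list(reader)


if __name__ == "__main__":
    buf = io.StringIO()
    write_dataset_csv([("What is 2+2?", "4"), ("Capital of France?", "Paris")], buf)
    buf.seek(0)
    rows = validate_dataset_csv(buf)
    print(len(rows), rows[0]["output"])  # prints: 2 4
```

When writing to a real file, open it with `newline=""` as the `csv` module documentation recommends, so line endings inside quoted fields are handled correctly.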

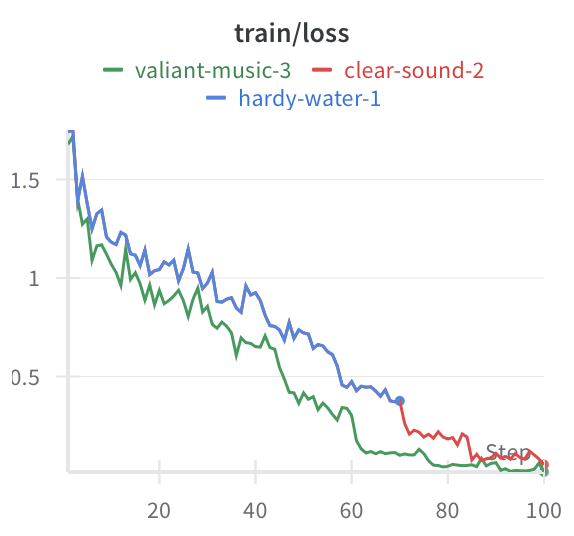

Weights & Biases Integration

We now support Weights & Biases, an open tool for tracking AI experiment metrics such as training loss and validation loss. Bring your own API key, or host your own instance.

Migration to LiteLLM for Inference

We moved inference from a mix of OpenAI + LangChain to LiteLLM. LiteLLM has been great, allowing us to quickly add new providers and create reusable tests across all models. I'm very happy to be done with LangChain 😂.

Fun fact: Kiln has 2,557 test cases and 92% test coverage.

On top of unit tests, we have online tests that run every built-in model against every feature to ensure things work smoothly. Even with tests, swapping out the core inference engine in a project this size can cause bugs. Please report any issues you see in GitHub Issues.
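To illustrate the idea of testing every built-in model against every feature, here is a minimal sketch of a model × feature test matrix in plain Python. It is not Kiln's actual test suite: the model IDs, feature names, and the `run_check` stub are hypothetical placeholders.

```python
from itertools import product

# Hypothetical model IDs and feature names, for illustration only.
MODELS = ["model_a", "model_b", "model_c"]
FEATURES = ["structured_output", "synthetic_data_gen"]


def run_check(model, feature):
    """Placeholder for a real online test; this stub always passes."""
    return True


def run_matrix(models, features, check):
    """Run `check` on every (model, feature) pair and return the failing pairs."""
    failures = []
    for model, feature in product(models, features):
        if not check(model, feature):
            failures.append((model, feature))
    return failures


if __name__ == "__main__":
    print(run_matrix(MODELS, FEATURES, run_check))  # prints: []
```

In a real suite each pair would call the model through the inference layer, and stacking two `pytest.mark.parametrize` decorators is a common way to get the same cross product as individually reported test cases.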

Python Libraries

Kiln isn't just an app. Our open-source Python library, kiln_ai, supports essentially all the features you can see in the UI. For this update, library updates include:

All features above are available in the Python library

New docs for connecting AI providers + custom models from code

Python 3.13 support, and ready for 3.14

See the library docs for details.

And More

There are lots of small quality-of-life improvements that make Kiln easier to use:

Allow editing titles/descriptions throughout the UI

Add UI for deleting items, including: evals, tasks, runs, custom prompts, projects

Bug fixes

Try it out, and as always, please give feedback on Discord.

Thanks for all your support!

Steve - The Kiln Maintainer